I am a product management and product marketing consultant who has been focused on WebRTC for the past couple of years. I am not a professional developer. This is how I won $1000 as an amateur at the TADHack.

My background

I did go to school for Electrical Engineering and did some coding there (mostly assembly 😮), but I have been on the marketing side of communications companies for pretty much my whole career. I am fortunate that I get to interact with a lot of great engineers. While I envy much of what I see them do, I have no plans to become a professional developer. I started programming again as an occasional hobby a few years ago and have done a couple of “hacks” for webrtcHacks on my own (lengthy) timelines – mostly as a fun way to learn and get hands-on with some of the technology. Now it was time to give a hackathon a serious try.

Starting Constraints

I attempted the TADHack last year, but didn’t really know what I was doing. It was a great learning experience, but I gave up after wearing myself out and never submitted anything. This time around I decided to be a lot more focused and set some guidelines for myself beyond the official rules:

- Make something cool – maybe this is obvious, but it was important I found a project that I thought was cool to keep me engaged and make me smarter when I was done

- Do something with WebRTC – I’m a WebRTC guy, so this was also a given

- Don’t spend a lot of time coding – unlike some hackathons, the TADHack is pretty lax about timing and there is no official start time. Once I start coding it’s easy to get sucked in, so I set some firm timelines: spend a few hours looking at the sponsors Friday afternoon, start coding at 6PM on Friday, and stop coding by 4PM on Saturday

- Play to win – the potential for $1000 is a great motivator

Coming up with a great idea

I usually have a bunch of good ideas in mind, but this time I struggled. I am very interested in the intersection of the Internet of Things and WebRTC, so I started out by researching Intel’s Edison. Unfortunately I didn’t see a quick way to put WebRTC on the Edison, so I abandoned the idea since it would violate my third guideline.

My past couple of hacks used WebRTC to solve some household problem I had come across. In the days before the TADHack I happened to be having problems with the dehumidifier in my basement. It would randomly turn off after running for many hours. It supposedly flashed an error code before it turned off, but that does not do you much good unless you happen to be there staring at it. That gave me the idea of building a generic app that could alert you when something of interest happens visually.

I was hoping to have something more compelling in mind before I started on Friday, but I ran out of time. Fortunately the ideas started flowing once I dug in.

Design & Development

Ideally I would have architected a design, selected the components I wanted to work with, and then coded everything together. Reality was much messier – I had a rough design in my head and a few starting elements:

- Try to keep everything in JavaScript as much as I could

- Use jQuery and Bootstrap to help with the UI elements since I had taken some w3schools tutorials on them

- Reuse a motion detection library I modified from a previous project
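The motion detection library itself isn't shown in this post. As a rough illustration only, here is a generic frame-differencing check in TypeScript (a typed superset of the JavaScript the app used): it compares two RGBA frames, such as the `ImageData.data` arrays a canvas `getImageData()` call returns, and reports motion when enough pixels change brightness. The function names and threshold values are hypothetical, not taken from the ThirdEye code.

```typescript
// Generic frame-differencing sketch (NOT the author's library).
// Frames are RGBA byte arrays, e.g. ctx.getImageData(0, 0, w, h).data.

/** Hypothetical tuning knobs; the default values used below are illustrative only. */
interface MotionOptions {
  pixelThreshold: number; // brightness change (0-255) for a pixel to count as "changed"
  areaThreshold: number; // fraction of changed pixels (0-1) that counts as motion
}

/** Approximate perceived brightness of the RGBA pixel starting at byte offset i. */
function luma(data: Uint8ClampedArray, i: number): number {
  return 0.299 * data[i] + 0.587 * data[i + 1] + 0.114 * data[i + 2];
}

/** Fraction of pixels whose brightness changed by more than pixelThreshold. */
function changedFraction(
  prev: Uint8ClampedArray,
  curr: Uint8ClampedArray,
  pixelThreshold: number,
): number {
  if (prev.length !== curr.length || curr.length % 4 !== 0) {
    throw new Error("frames must be RGBA arrays of the same size");
  }
  const pixelCount = curr.length / 4;
  if (pixelCount === 0) return 0;
  let changed = 0;
  for (let i = 0; i < curr.length; i += 4) {
    if (Math.abs(luma(curr, i) - luma(prev, i)) > pixelThreshold) changed++;
  }
  return changed / pixelCount;
}

/** True when the share of changed pixels exceeds opts.areaThreshold. */
function detectMotion(
  prev: Uint8ClampedArray,
  curr: Uint8ClampedArray,
  opts: MotionOptions = { pixelThreshold: 30, areaThreshold: 0.02 },
): boolean {
  return changedFraction(prev, curr, opts.pixelThreshold) > opts.areaThreshold;
}
```

In a viewer, each video frame would be drawn to a canvas, the previous frame's pixel array kept, and `detectMotion` called on every new frame. The two thresholds exist because camera noise and lighting flicker change some pixels even when nothing moves.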

Choosing a Sponsor Platform

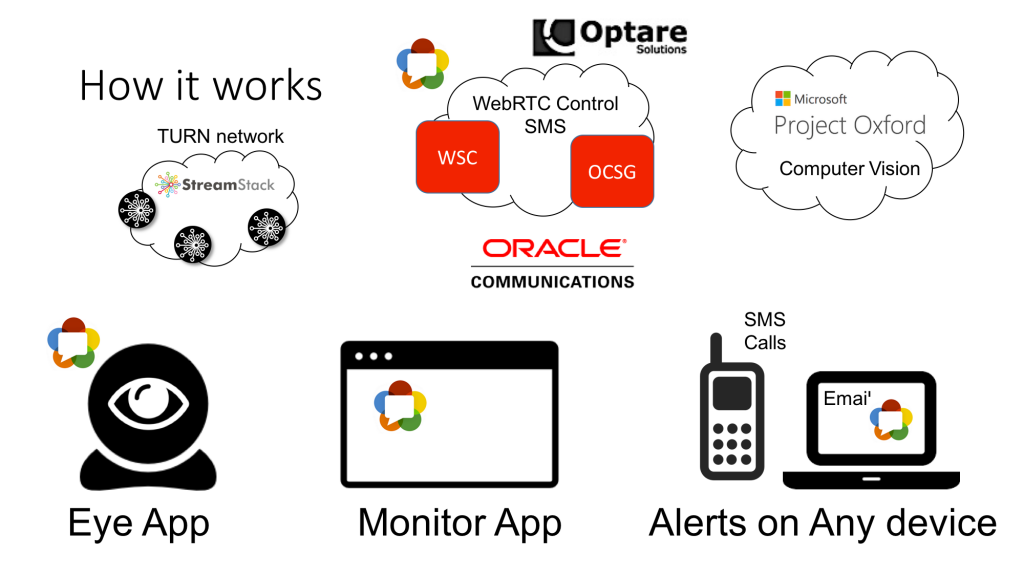

Keeping with my goal of not spending a lot of time, I was looking for a sponsor Platform-as-a-Service that was quick to work with. I didn’t want to spend time setting up a web server. I needed WebRTC support, and the ability to send text messages and make calls would be nice. I ended up using Oracle Communications in Optare’s TADHack sandbox. Optare had Oracle’s WebRTC Session Controller (OCWSC or WSC), which I could use for WebRTC signaling, and Services Gateway (OCSG) for sending text messages – both elements I needed without requiring any installation or server-side coding.

Their main sample app was a modified version of Mozilla’s TogetherJS using the WSC. It took me a few hours to extract and modify the code, and then I was easily making WebRTC calls at will.

Oracle’s Anton Yuste was a great help in giving me a cURL example for using the OCSG to send a text message. From there it was easy to convert this to an AJAX call.
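The post doesn't reproduce the cURL command, so the request below is an assumption: it is modeled on the GSMA OneAPI SMS REST shape (`/smsmessaging/v1/outbound/{senderAddress}/requests` with an `outboundSMSMessageRequest` body), which may or may not match what Optare's sandbox expected. The sketch only builds the request; the base URL, phone numbers, and `Authorization` value are placeholders, and `buildSmsRequest` is a hypothetical helper.

```typescript
// Hypothetical sketch: build the request an AJAX call could send to a
// GSMA OneAPI-style SMS endpoint. The path, payload shape, and auth scheme
// are ASSUMPTIONS, not the exact request from Oracle's cURL example.

interface SmsRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildSmsRequest(
  baseUrl: string, // placeholder for the sandbox's gateway URL
  sender: string, // e.g. "tel:+15550100" (placeholder number)
  recipient: string, // e.g. "tel:+15550123" (placeholder number)
  message: string,
  authorization: string, // assumed: an auth header value issued by the sandbox
): SmsRequest {
  // Strip trailing slashes so the path joins cleanly; the sender goes in the path URL-encoded.
  const url =
    baseUrl.replace(/\/+$/, "") +
    "/smsmessaging/v1/outbound/" +
    encodeURIComponent(sender) +
    "/requests";
  const payload = {
    outboundSMSMessageRequest: {
      address: [recipient],
      senderAddress: sender,
      outboundSMSTextMessage: { message: message },
    },
  };
  return {
    url: url,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Accept: "application/json",
      Authorization: authorization,
    },
    body: JSON.stringify(payload),
  };
}
```

In the browser, the returned object maps directly onto `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })` or onto jQuery's `$.ajax({ url: req.url, type: "POST", headers: req.headers, data: req.body })`.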

Full disclosure: I worked at Oracle Communications for a few months after it acquired Acme Packet. I helped launch the WSC 2 years ago, but I had never actually used it; this was my first hands-on exposure to it and to the hosted sandbox offering by Optare Solutions.

General Architecture & Flow

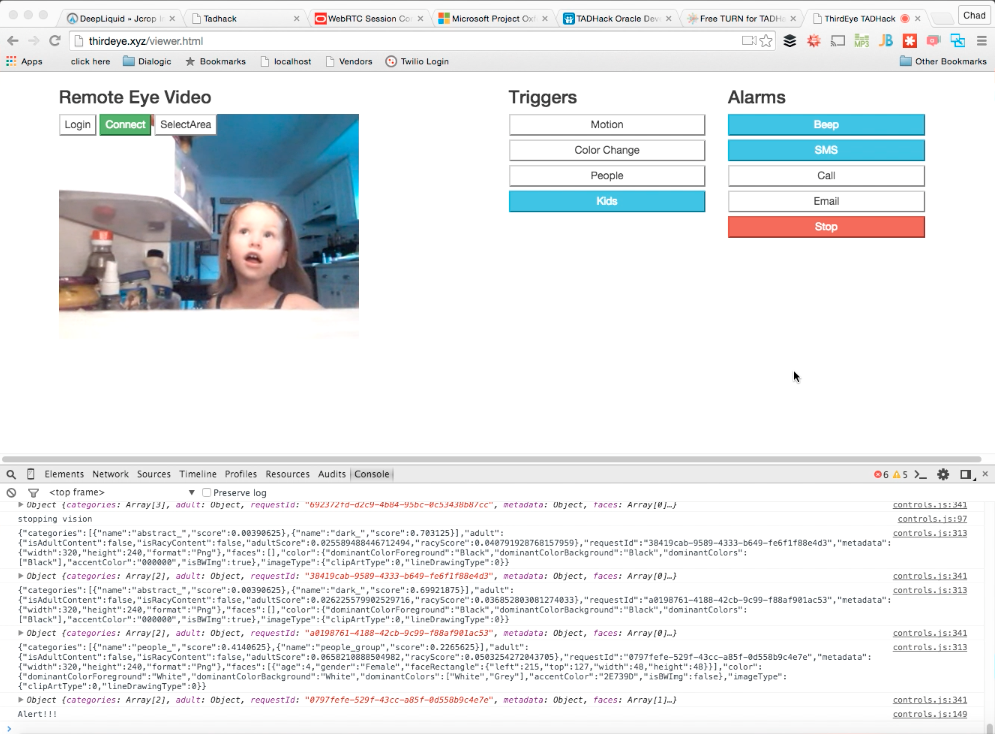

The app works like this:

- Set up your remote eye on the object/location you want to watch – this is just a WebRTC camera device, like an Android smartphone with Chrome (it would be nice to make this an embedded device one day). You need to log in to the WSC to authenticate, then you can start a WebRTC video session.

- Set up a viewer on a browser that supports WebRTC – again you need to log in to the WSC to authenticate before using the “Connect” button to establish the WebRTC peer connection to the eye

- Pick from one of several triggers on the viewer – I started with a basic motion detection trigger

- Pick from one or more alarms – I started with a Web Audio beep and added the OCSG text message

- Press “start” on the viewer to initiate the triggers

- When one of those triggers goes off, it activates the selected alarms
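The trigger/alarm flow above amounts to a small dispatcher. Here is a hedged TypeScript sketch of that pattern; `Trigger`, `Alarm`, and `Watcher` are hypothetical names, and a real alarm would wrap the Web Audio beep or the OCSG text message rather than a simple callback.

```typescript
// Illustrative sketch of the viewer's trigger -> alarm flow.
// All names here are hypothetical; ThirdEye's real code may be organized differently.

/** A trigger inspects an input (e.g. a motion score or an API response) and reports whether it fired. */
interface Trigger<T> {
  name: string;
  check(input: T): boolean;
}

/** An alarm reacts to a fired trigger (e.g. a Web Audio beep or a text message). */
interface Alarm {
  name: string;
  raise(triggerName: string): void;
}

class Watcher<T> {
  private running = false;

  constructor(private triggers: Trigger<T>[], private alarms: Alarm[]) {}

  /** Corresponds to pressing "start" on the viewer. */
  start(): void {
    this.running = true;
  }

  stop(): void {
    this.running = false;
  }

  /**
   * Feeds one input to every selected trigger. Each selected alarm is raised
   * once per trigger that fired. Returns the names of the triggers that fired.
   */
  process(input: T): string[] {
    if (!this.running) return [];
    const fired = this.triggers.filter((t) => t.check(input)).map((t) => t.name);
    for (const name of fired) {
      for (const alarm of this.alarms) alarm.raise(name);
    }
    return fired;
  }
}
```

Keeping triggers and alarms behind small interfaces is what lets the viewer combine any trigger with any set of alarms, which matches the pick-from-a-list UI described above.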

The real point is to be able to put a low-powered remote camera anywhere you want and have a separate viewer app handle all the heavy lifting.

Putting it together

I stayed up until about 5:30AM on Friday night after needlessly wasting several hours staring cross-eyed at my screen trying to accomplish something I can’t remember at this point. Whatever it was, I fixed it quickly after I got some rest. By Saturday morning I had most of the above in place. My son had a birthday party mid-day that Saturday, which forced me to take a break for several hours.

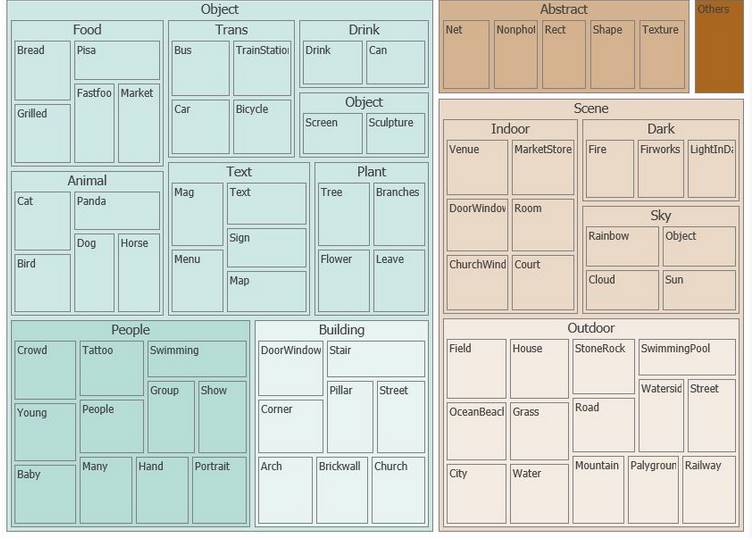

Fun Stuff – Computer Vision

I felt pretty good at this point, but my application didn’t do much more than the motion-detecting baby monitor experiment I had put together almost 2 years ago. I had been wanting to play around with Microsoft’s Project Oxford Computer Vision APIs for a few weeks, ever since learning that Project Oxford was what powered the viral how-old.net photos everyone was posting back in May. I had a few hours to go before my self-imposed 4PM end-of-coding deadline, so I decided to give it a try.

I found a sample how-old application by Mircea Piturca that uses WebRTC’s getUserMedia. It turned out that accessing the Project Oxford APIs was pretty simple. Here’s how I did it:

- Grab a picture – I used Mozilla’s guide on Taking still photos with WebRTC as a reference. I limited this to once every three seconds so I would not exceed my free usage quota on the API

- Then I used the how-old with WebRTC code mentioned above to convert this data blob to the proper format and make a REST API call to Microsoft’s Azure to access the API

- From there I just had to monitor the API response to see what it noticed
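Two parts of the snapshot step lend themselves to a sketch: limiting snapshots to one every three seconds, and turning the canvas data URL into raw bytes for the POST body. The helper names are hypothetical and this is not the how-old sample's own code; in a browser, `atob` or `canvas.toBlob()` would do the decoding, and the hand-rolled base64 decoder is written out only so the sketch runs anywhere.

```typescript
// Minimal sketch of two snapshot helpers (hypothetical names).

/** Allows at most one snapshot per intervalMs (the post used three seconds). */
class SnapshotThrottle {
  private last = -Infinity;

  constructor(private readonly intervalMs: number) {}

  /** Returns true (and records the time) if enough time has passed since the last allowed snapshot. */
  tryAcquire(nowMs: number): boolean {
    if (nowMs - this.last < this.intervalMs) return false;
    this.last = nowMs;
    return true;
  }
}

const B64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/** Decodes a base64 data URL (e.g. from canvas.toDataURL("image/jpeg")) into raw bytes. */
function dataUrlToBytes(dataUrl: string): { mimeType: string; bytes: Uint8Array } {
  const comma = dataUrl.indexOf(",");
  const match = /^data:([^;]+);base64$/.exec(dataUrl.slice(0, comma));
  if (comma < 0 || !match) throw new Error("expected a base64 data URL");
  const b64 = dataUrl.slice(comma + 1).replace(/=+$/, ""); // drop padding
  const out: number[] = [];
  let acc = 0; // bit accumulator
  let bits = 0; // number of valid bits in acc
  for (let i = 0; i < b64.length; i++) {
    const v = B64.indexOf(b64.charAt(i));
    if (v < 0) throw new Error("invalid base64 character");
    acc = (acc << 6) | v; // each base64 character carries 6 bits
    bits += 6;
    if (bits >= 8) {
      bits -= 8;
      out.push((acc >> bits) & 0xff); // emit the top 8 bits as one byte
      acc &= (1 << bits) - 1; // keep only the bits not yet emitted
    }
  }
  return { mimeType: match[1], bytes: new Uint8Array(out) };
}
```

Project Oxford-era Vision endpoints accepted raw image bytes with `Content-Type: application/octet-stream` and an `Ocp-Apim-Subscription-Key` header; treat those details as assumptions to check against the current API reference.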

The Computer Vision API detects a lot of stuff. I could theoretically alert on pets (which would have helped out here). I decided to detect people in general and faces in particular. The hardest part was sorting through the massive amount of data this API returns.
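Sorting through the response mostly means reducing it to a yes/no answer. This sketch assumes a response shaped like the Computer Vision "analyze" output, with `categories` entries whose names start with `people` (for example `people_group`) and a `faces` array; those field names, the `seesPerson` helper, and the 0.5 score cutoff are assumptions to verify against the API version in use.

```typescript
// Assumed response shape (verify against the API version you call).
interface Category {
  name: string; // e.g. "people_group" or "animal_dog"
  score: number; // confidence 0-1
}
interface Face {
  age: number;
  gender?: string;
}
interface AnalysisResult {
  categories?: Category[];
  faces?: Face[];
}

/** True if the response lists at least one face. */
function seesFace(result: AnalysisResult): boolean {
  return (result.faces || []).length > 0;
}

/** True if a face was found or a "people" category scored at least minScore (illustrative cutoff). */
function seesPerson(result: AnalysisResult, minScore = 0.5): boolean {
  if (seesFace(result)) return true;
  return (result.categories || []).some(
    (c) => c.name.indexOf("people") === 0 && c.score >= minScore,
  );
}
```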

Like the how-old app, the Computer Vision API returns an age whenever it sees a face. This gave me an idea: my daughter had been secretly raiding the refrigerator when we weren’t looking. Could I use the API to detect when she, and no one else, opens the fridge?
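One way to frame the fridge question: alarm when at least one face is detected and every detected face is estimated below some age. Age estimates are noisy, so this hedged sketch also requires several consecutive matching snapshots before firing. The 12-year cutoff, the streak length, and the helper names are illustrative assumptions, not values from ThirdEye.

```typescript
interface Face {
  age: number; // estimated age from the (assumed) response shape
}

/** True when there is at least one face and every face is at or below maxAge. */
function onlyYoungFaces(faces: Face[], maxAge: number): boolean {
  return faces.length > 0 && faces.every((f) => f.age <= maxAge);
}

/** Fires only after `required` consecutive matching snapshots, to smooth out noisy estimates. */
class StreakConfirmer {
  private streak = 0;

  constructor(private readonly required: number) {}

  /** Records one snapshot result; returns true while the streak is long enough. */
  update(match: boolean): boolean {
    this.streak = match ? this.streak + 1 : 0;
    return this.streak >= this.required;
  }
}

// Example wiring with illustrative values: a child-only face must appear in
// two snapshots in a row (about three seconds apart at the post's snapshot rate).
const fridgeWatch = new StreakConfirmer(2);

function onSnapshot(faces: Face[]): boolean {
  return fridgeWatch.update(onlyYoungFaces(faces, 12));
}
```

Requiring agreement across snapshots trades a few seconds of latency for fewer false alarms when a single frame misjudges an adult's age.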

If I had more time there is a ton more I could have done with this API.

Naming & hosting

I started doing all my tests locally, but along the way I realized it would help to have the eye and viewer apps hosted on a web server. This was also a good time to find a name, so I settled on ThirdEye and was able to get the thirdeye.xyz domain for a couple of bucks. I bought a “droplet” on DigitalOcean to host my HTML, CSS, and JS files for $5.

Final Testing

Now it was time to do some final tests to make sure the Computer Vision API worked reliably enough for a demo (the other APIs were solid at this point). Fortunately my kids were eager to help out after I had been ignoring them most of the day.

It took some more timing and UI tweaks, but everything was working reasonably well and I was only about an hour past my 4PM deadline (so much for deadlines). Now it was time to get ready for my submission.

Remote Submission

As a remote entrant, I figured having a good video was important since there is no way to interact with the judges. The easiest approach seemed to be shooting a bunch of videos covering various aspects of the hack and editing them together. I ended up with far too much footage for a 5-minute video. This took 3 times longer than I expected, mostly because poor planning left me wasting time trying to get my video editing software to work with QuickTime.

I finally got everything submitted around midnight (ET) on Saturday after about 28 total man-hours of effort, including a couple of hours before the event researching ideas that I didn’t end up using.

Try it Yourself

I am still hosting the site for the time being, so you can try it yourself (for demonstration purposes only):

Eye app: http://thirdeye.xyz/eye.html

Viewer app: http://thirdeye.xyz/viewer.html

See my github page for the code: https://github.com/chadwallacehart/ThirdEye

Winning

I found out I won that Tuesday and got my check later that week. Sweet! $1000 goes a long way to make you feel better about losing a night of sleep.

My Take-aways

- TADHack is really about hacking things together – there are so many interesting things you can do quickly by combining APIs from different sources, and telecom APIs are a part of that

- Use the sponsor’s help – it’s certainly easier to get help from a sponsor when you are on-site with them, but my experience interacting with Oracle over email was great. In retrospect I could probably have saved an hour or two if I had asked some questions or had a short conversation with Anton on Friday afternoon

- Get some sleep – I could have used Andrey Zakharchenko’s Drowsy Monitor TADHack-mini London app to tell me to go to bed; I wasted several hours in zombie land for no reason instead of getting some rest

- Video editing takes a lot of time – I should have scripted a 5-minute video and taken care of the whole thing in a couple of takes

- You don’t need to be a professional developer to win – I am admittedly a TAD insider, but it was refreshing to see so many amateur developers on the winners list, and there are plenty of prizes to go around

Thanks to Oracle for their support during the event and Alan Quayle and the other TADHack organizers for putting on a great event!

One thought on “How a Marketing Guy Won TADHack with ThirdEye”
